RankLens measures how visible your brand is inside large language models (LLMs). Instead of relying on one-off prompts or proxy signals, RankLens uses an entity-based and multi-sample methodology to quantify how often (and how confidently) AIs recommend you.

The next competitive advantage isn’t just ranking in search: it’s being mentioned more often by AI. RankLens gives you the framework to measure, compare, and improve that visibility with confidence.

Who RankLens Is For

- Marketing agencies & SEO firms proving visibility inside AI systems.

- Brand/PR teams tracking recommendation share and discovery.

- Growth leaders managing multi-location or multi-language visibility.

Core Concepts

Entities – Semantic topics that describe what you do (think: keyword-like concepts). Examples: “Nashville car dealer” and “app for finding new and used cars.”

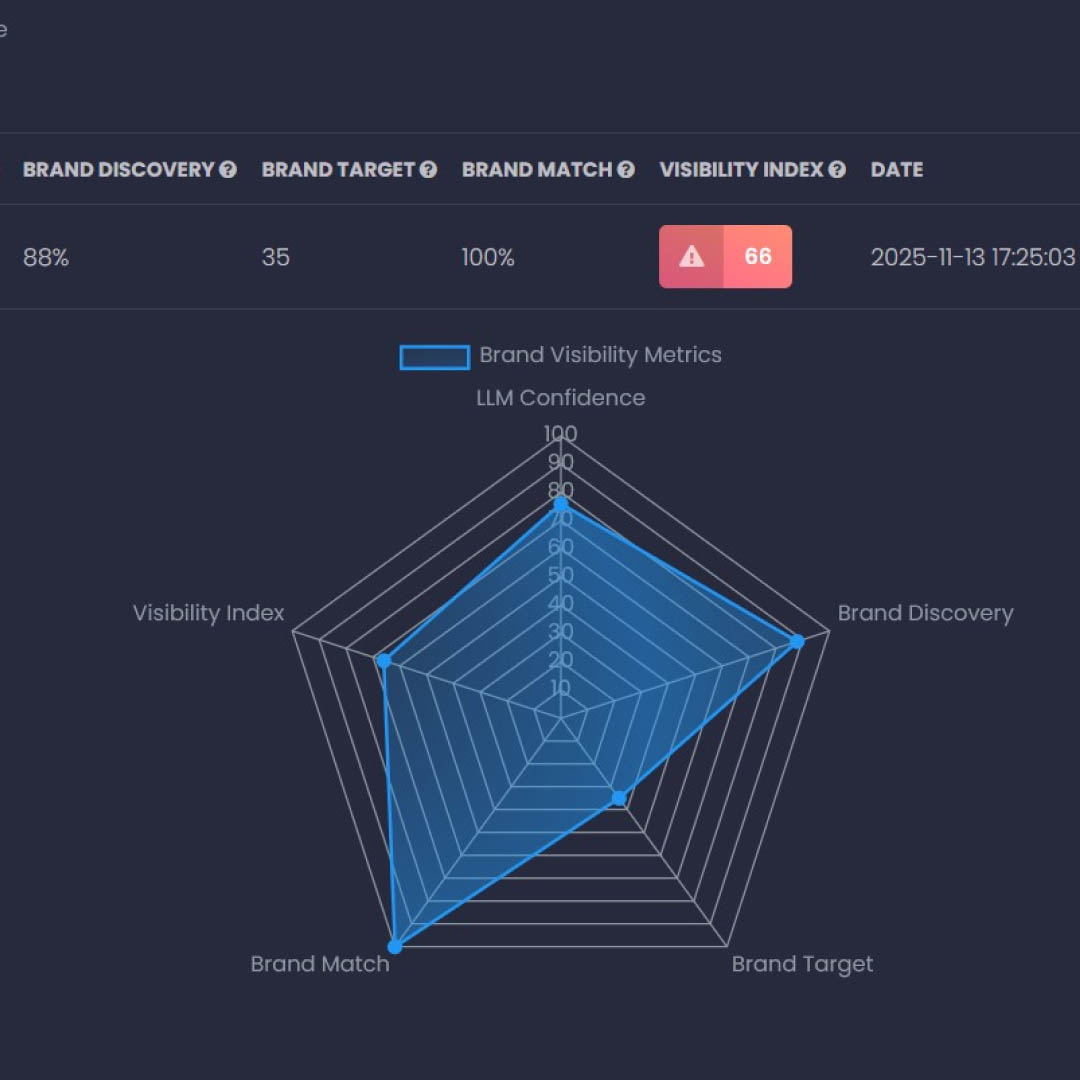

Visibility Index (0–100) – A composite score indicating overall AI visibility for your entities. Higher = more likely to appear in AI responses.

Measured Signals (per entity/engine across samples):

- Rank – Observed placement order within AI recommendations across runs.

- LLM Confidence – How confident the model is in its response.

- Brand Appearance (SoV) – How often your brand shows up, measured as Share of Voice (SoV): the percentage of all brand mentions in the AI’s responses that went to your brand, compared with all other brands.

- Brand Discovery – Likelihood of being recommended by the AI.

- Brand Target – Precision/stability of the AI’s recommendation.

- Brand Match – Name/URL matching strength (uses cosine similarity across brand and website variants).
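Brand Match is described as a cosine similarity across brand and website variants. The vectorization RankLens uses is not documented here, so the sketch below assumes simple character-trigram count vectors. The function names (`trigram_vector`, `brand_match`) and the rule of taking the best score across variants are illustrative assumptions, not RankLens’s implementation.

```python
import math
from collections import Counter


def trigram_vector(text: str) -> Counter:
    """Count character trigrams of a lowercased, space-padded string (assumed vectorization)."""
    padded = f"  {text.lower().strip()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def brand_match(mention: str, variants: list[str]) -> float:
    """Best similarity between an AI-mentioned name/URL and any known brand or website variant."""
    mention_vec = trigram_vector(mention)
    return max(cosine_similarity(mention_vec, trigram_vector(v)) for v in variants)


# Hypothetical brand name and domain variants.
variants = ["Acme Motors", "acmemotors.com"]
print(round(brand_match("ACME Motors", variants), 3))   # same text after lowercasing
print(round(brand_match("Zenith Autos", variants), 3))  # unrelated brand
```

Case differences disappear after lowercasing, so “ACME Motors” scores as a full match, while an unrelated name shares no trigrams and scores zero.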

Important: LLM outputs vary. Multiple samples are essential to establish a stable, decision-ready baseline.

Accuracy & Sampling

LLMs are stochastic, so a single test is not representative. RankLens runs each configuration 4, 8, or 16 times (4×, 8×, 16×) and aggregates:

- Average/lowest/highest observed rank

- Confidence and appearance frequency

- Discovery and target precision

- Composite Visibility Index
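A minimal sketch of aggregating repeated samples for one entity/engine pair. It assumes each sample records the brand’s rank (or `None` when the brand was not recommended) and the engine’s confidence on a 0–1 scale. The `Sample` and `aggregate` names, and the choice to compute rank statistics only over samples where the brand appeared, are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Sample:
    rank: Optional[int]   # 1 = first recommendation; None = brand not mentioned (assumed encoding)
    confidence: float     # engine-reported confidence in [0, 1] (assumed scale)


def aggregate(samples: list[Sample]) -> dict:
    """Summarize repeated runs of one entity/engine configuration."""
    ranks = [s.rank for s in samples if s.rank is not None]
    summary = {
        "samples": len(samples),
        "appearance_rate": len(ranks) / len(samples),  # share of runs that mention the brand
        "avg_confidence": mean(s.confidence for s in samples),
    }
    if ranks:
        summary.update(
            avg_rank=mean(ranks),
            lowest_rank=min(ranks),   # numerically lowest = best placement
            highest_rank=max(ranks),  # numerically highest = worst placement
        )
    return summary


runs = [Sample(2, 0.8), Sample(1, 0.9), Sample(None, 0.6), Sample(3, 0.7)]  # a 4x run
print(aggregate(runs))
```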

Guideline:

- 4× – Quick directional read.

- 8× – Balanced accuracy vs. speed.

- 16× – Highest reliability for reporting.
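Why more samples help: if each run is treated as an independent yes/no trial for whether the brand appears (a simplifying assumption, since real LLM runs may be correlated), the standard error of the observed appearance rate is √(p(1 − p)/n). Doubling the sample size shrinks it by a factor of about 1.41, and quadrupling it halves it. The helper name below is illustrative.

```python
import math


def appearance_std_error(p: float, n: int) -> float:
    """Binomial standard error of an observed appearance rate p over n independent runs."""
    return math.sqrt(p * (1 - p) / n)


for n in (4, 8, 16):
    # p = 0.5 is the worst case (largest error) for a yes/no outcome
    print(f"{n:>2}x  +/- {appearance_std_error(0.5, n):.3f}")
```

With p = 0.5 this prints 0.250, 0.177, and 0.125 for 4×, 8×, and 16×, which is one way to see why 16× is the most reliable setting for reporting.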

Supported AI Engines

BETA 2 (PRO) or later includes the latest versions of major LLMs:

- ChatGPT 4o/4.1/5/5.1

- ChatGPT Search

- Perplexity Sonar

- Google Gemini

- Anthropic Claude

- xAI Grok

- DeepSeek

- Meta Llama

Note: Google AI Mode and AI Overviews are treated as part of Google Search and are included in the SEO AI Dashboard, not RankLens engine selection.

Quick Start (2-Minute Setup)

- Create a Site – Add Domain and Brand (both are used for matching and reporting).

- Get Entities – Use existing research or click Get Entities to open Insight Igniter AI for entity discovery.

- Configure Report – Choose Language, Location, Sample Size, AI Engine, and Match Type (intent/context).

- Run Report – Start sampling; progress is shown in app.

- Review Results – Open the LLM Entity Report to read the Visibility Index, rank stats, and diagnostic metrics.

Running Your First Report

- Select your Site.

- Add or import Entities.

- Set Language and Location.

- Choose Sample Size (4×/8×/16×).

- Select AI Engine.

- Pick a Match Type.

- Run the report.
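The settings chosen in these steps can be thought of as one configuration record. The sketch below is a hypothetical model, not a RankLens API or export format: the `ReportConfig` class, its field names, and the example values are assumptions. It enforces the one constraint the document states explicitly, that sample size is 4×, 8×, or 16×.

```python
from dataclasses import dataclass

ALLOWED_SAMPLE_SIZES = {4, 8, 16}  # 4x / 8x / 16x, per the sampling guideline


@dataclass(frozen=True)
class ReportConfig:
    """Hypothetical model of one RankLens report configuration."""
    site: str
    entities: tuple[str, ...]
    language: str
    location: str
    sample_size: int
    engine: str
    match_type: str

    def __post_init__(self) -> None:
        # Reject settings the report would not accept.
        if self.sample_size not in ALLOWED_SAMPLE_SIZES:
            raise ValueError(f"sample_size must be one of {sorted(ALLOWED_SAMPLE_SIZES)}")
        if not self.entities:
            raise ValueError("at least one entity is required")


config = ReportConfig(
    site="example.com",
    entities=("Nashville car dealer",),
    language="English",
    location="Nashville, TN",
    sample_size=8,
    engine="Anthropic Claude",
    match_type="Family-Friendly",
)
print(config)
```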

Tip: You can later use Reuse Settings to iterate quickly (e.g., test a new match type or a neighboring city).

Report Settings Explained

Language

The language you and your audience use affects how AIs interpret and recommend brands. Set language to match your market.

Location

Visibility is often geo-specific (city, neighborhood, state, country). Configure once, then copy to multiple locations for scale.

Sample Size

Controls the trade-off between accuracy and speed. More samples lengthen runtime but stabilize metrics.

AI Engine

Pick from the engines listed under Supported AI Engines. Switching engines can materially change results, so track each engine separately.

Schedule

Automate recurring runs:

- Weekly: Every Monday/Tuesday/…/Sunday

- Monthly: 1st, 7th, 14th, 28th

- Once or Daily

Notifications: Enable Notify me when finished to receive an email when runs complete. This is a global account setting (not per report).
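Because every monthly option falls on or before the 28th, each one exists in every month, which keeps date calculations simple. A minimal sketch of finding the next monthly run date, assuming a run scheduled for today still counts as upcoming; `next_monthly_run` is a hypothetical helper, not part of RankLens.

```python
from datetime import date

MONTHLY_DAYS = (1, 7, 14, 28)  # monthly schedule options listed above


def next_monthly_run(today: date, day: int) -> date:
    """Next date (today or later) that falls on the chosen day of the month."""
    if day not in MONTHLY_DAYS:
        raise ValueError(f"day must be one of {MONTHLY_DAYS}")
    if today.day <= day:
        return today.replace(day=day)
    # Already past this month's run day: roll over to next month (and next year in December).
    if today.month == 12:
        return date(today.year + 1, 1, day)
    return date(today.year, today.month + 1, day)


print(next_monthly_run(date(2025, 3, 15), 14))
```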

Match Intent Type

RankLens includes ~200 match types to align with user intent (e.g., “Sustainably Sourced,” “Family-Friendly,” “Lifetime Warranty,” “Latest Models,” “Easiest to Use”). For the full list, see the RankLens Match Types Field Manual.

Why it matters: Prompt-only tools miss intent alignment inside LLMs. Match Intent Types let you analyze contextual fit beyond generic prompts.

Interpreting the Brand Visibility Report

Each run produces an entity-by-engine view with:

- Visibility Index (0–100) – Overall AI visibility.

- Rank Stats – Average, lowest, and highest observed ranks across samples.

- LLM Confidence – Confidence reported by the engine.

- Brand Discovery – Likelihood of being recommended.

- Brand Target – Precision/stability of the recommendation.

- Brand Appearance (Share of Voice) – Relative frequency of mention.

- Brand Match – Name/URL matching quality (cosine similarity across both brand and website signals).
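Share of Voice, as defined under Core Concepts, compares your brand’s mentions with the mentions of all brands. A minimal sketch, assuming each sample is the list of brands an engine recommended and that every mention counts equally (RankLens may weight by rank or confidence; that is not documented here). The function name and brand names are hypothetical.

```python
from collections import Counter


def share_of_voice(samples: list[list[str]]) -> dict[str, float]:
    """Percentage of all brand mentions across samples that each brand received."""
    mentions = Counter(brand for sample in samples for brand in sample)
    total = sum(mentions.values())
    return {brand: 100 * count / total for brand, count in mentions.most_common()}


samples = [
    ["Acme Motors", "Zenith Autos", "Pike Cars"],
    ["Zenith Autos", "Acme Motors"],
    ["Zenith Autos", "Pike Cars"],
    ["Acme Motors", "Zenith Autos"],
]
for brand, sov in share_of_voice(samples).items():
    print(f"{brand}: {sov:.1f}%")
```

Here Zenith Autos has 4 of 9 mentions (about 44.4%), Acme Motors 3 of 9, and Pike Cars 2 of 9.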

Reading volatility: LLM results can fluctuate across runs. Use the average and range to gauge stability, then increase sampling if variance is high.
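One way to put numbers on “average and range” is sketched below, assuming you have the per-sample ranks for a configuration. The coefficient of variation (population standard deviation divided by the mean) and the 0.3 threshold are illustrative choices, not RankLens settings; the helper names are hypothetical.

```python
from statistics import mean, pstdev


def rank_volatility(ranks: list[int]) -> dict:
    """Average, range, and coefficient of variation (stdev / mean) of observed ranks."""
    avg = mean(ranks)
    return {
        "average": avg,
        "range": max(ranks) - min(ranks),
        "cv": pstdev(ranks) / avg,
    }


def needs_more_samples(ranks: list[int], sample_size: int, cv_threshold: float = 0.3) -> bool:
    """Suggest stepping up from 4x/8x when ranks vary a lot (threshold is an assumption)."""
    return sample_size < 16 and rank_volatility(ranks)["cv"] > cv_threshold


stable = [2, 2, 3, 2]
noisy = [1, 6, 2, 9]
print(needs_more_samples(stable, 4), needs_more_samples(noisy, 4))
```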

Collective Reporting (Project-Level Views)

A project-level roll-up that aggregates across all reports:

- AI Visibility Accumulated – Cumulative visibility growth over time. Use it to verify whether your generative search strategy is accruing impact.

- Total AI Visibility – Latest brand visibility across all engines at a glance.

- AI Visibility Timeline – Shows when engines ran and how trends align with model changes or campaigns.

Competitor Comparison

For each entity, see a Top 5 comparison on:

- Average Rank

- Brand Appearance

- Brand Discovery

Use this to benchmark your share and to detect new entrants gaining AI recommendation share.
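A sketch of building a Top 5 view by average rank, assuming per-brand aggregates are already available (brand names and numbers are made up). Sorting by average rank and breaking ties by higher appearance is an illustrative choice; the document does not say how RankLens orders the Top 5.

```python
from typing import NamedTuple


class BrandStats(NamedTuple):
    brand: str
    avg_rank: float      # lower is better
    appearance: float    # share of mentions, 0-100
    discovery: float     # likelihood of being recommended, 0-1 (assumed scale)


def top_five(stats: list[BrandStats]) -> list[BrandStats]:
    """Best five brands by average rank, ties broken by higher appearance."""
    return sorted(stats, key=lambda s: (s.avg_rank, -s.appearance))[:5]


competitors = [
    BrandStats("Acme Motors", 2.1, 30.0, 0.75),
    BrandStats("Zenith Autos", 1.4, 42.0, 0.9),
    BrandStats("Pike Cars", 3.5, 12.0, 0.4),
    BrandStats("Harbor Auto", 2.1, 35.0, 0.7),
    BrandStats("Summit Motors", 4.8, 6.0, 0.2),
    BrandStats("Delta Dealers", 5.9, 3.0, 0.1),
]
for position, s in enumerate(top_five(competitors), start=1):
    print(position, s.brand, s.avg_rank, s.appearance, s.discovery)
```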

Re-using Settings & Multi-Location Scaling

- Use Reuse Settings to duplicate a configuration, then tweak a single variable (e.g., different Match Type or city).

- For franchises or national brands, maintain a master template, then roll out to regions for consistent measurement.
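The Reuse Settings pattern (keep everything fixed and change one variable) can be sketched with Python’s `dataclasses.replace`. The `ReportConfig` fields and the city list are hypothetical; this illustrates the master-template idea, not a RankLens API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ReportConfig:
    """Hypothetical report settings; field names are illustrative."""
    language: str
    location: str
    sample_size: int
    engine: str
    match_type: str


master = ReportConfig("English", "Nashville, TN", 16, "Google Gemini", "Latest Models")

# Roll the master template out to other cities, changing only the location.
regional = [replace(master, location=city) for city in ("Memphis, TN", "Knoxville, TN")]

# A parallel report that tests a different buying motive in the same city.
eco = replace(master, match_type="Sustainably Sourced")

for cfg in [master, *regional, eco]:
    print(cfg.location, cfg.match_type, cfg.sample_size)
```

Because each copy differs from the template in exactly one field, differences between the resulting reports can be attributed to that field rather than to drift in other settings.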

Best Practices & Playbooks

Entity Strategy

- Start narrow with high-intent commercial entities, then expand.

- Map entities to buyer journeys (e.g., discovery → comparison → purchase).

Sampling

- Use 8× or 16× for client reporting and quarterly benchmarks.

- If volatility is high, increase sampling or expand the timeframe.

Match Types

- Create parallel reports with Reuse Settings to test buying motives (e.g., “eco-friendly,” “budget,” “premium,” “lifetime warranty”).

Multi-Location/Language

- Clone your best-performing setup to additional cities/regions/languages for consistent measurement.

Cadence

- Schedule weekly runs to monitor shifts; add monthly runs for executive roll-ups.

Stakeholder Views

- Use Total AI Visibility for executive summaries, Entity Detail for practitioner actions.

Troubleshooting

No/Low Results

- Entity too narrow or too broad → refine with Insight Igniter AI.

- Wrong language/location → align to audience.

High Volatility

- Increase sampling from 4× → 8×/16×.

- Extend the analysis window and compare averages.

Missing Engine Columns (All Keywords view)

- Columns only appear if data exists for that engine within the project.

Notifications Not Received

- Confirm Notify me when finished is enabled (global).

- Check spam filters and account email.

Schedules Didn’t Run

- Verify schedule selection (weekday vs. monthly date).

- Ensure the project/entities remain active and not paused.

Export Looks Different Than UI

- CSV omits internal identifiers by design; PDF reflects on-screen scope.

FAQs

Q: Why don’t you include Google AI Mode / AI Overviews?

A: They are treated as part of Google Search and are available via the SEO AI Dashboard.

Q: How many samples should I use?

A: 4× for quick reads, 8× for balanced accuracy, 16× for client-grade reporting.

Q: What’s the fastest way to scale to multiple cities?

A: Configure one city, then Reuse Settings and change location.

Q: Can I compare different buying intents?

A: Yes. Use Match Types and create parallel reports (e.g., “Family-Friendly” vs. “Latest Models”).

Q: Why does my Visibility Index change after re-running?

A: LLM outputs vary; re-sampling updates averages and stabilizes long-term trends.

Q: Where can I sign up to test RankLens?

A: Create an account here.

Glossary

- Entity: Topic/semantic concept used for measurement.

- Visibility Index: 0–100 score summarizing AI visibility.

- Brand Appearance: Share of mentions across samples.

- Brand Discovery: Likelihood of being recommended.

- Brand Target: Precision/stability of the AI’s recommendation.

- Brand Match: Name/URL similarity score (cosine similarity).

- Sampling (4×/8×/16×): Number of repeated runs per configuration.

- Collective Reporting: Project-level aggregation of results.

- Match Intent Type: Intent/context lens (≈200 options) applied to entities.